linguistics

i think i'll just put papers and things i find interesting here, and also write down some of my own thoughts on linguistics, because i realized that wow, i can say a lot about linguistics. i'm not a professional linguist yet, so these are just my thoughts as a guy who does linguistics and languages a lot.

cool stuff for conlangs

zompist lmao

stuff i found on mastodon

cool stuff for things besides conlangs

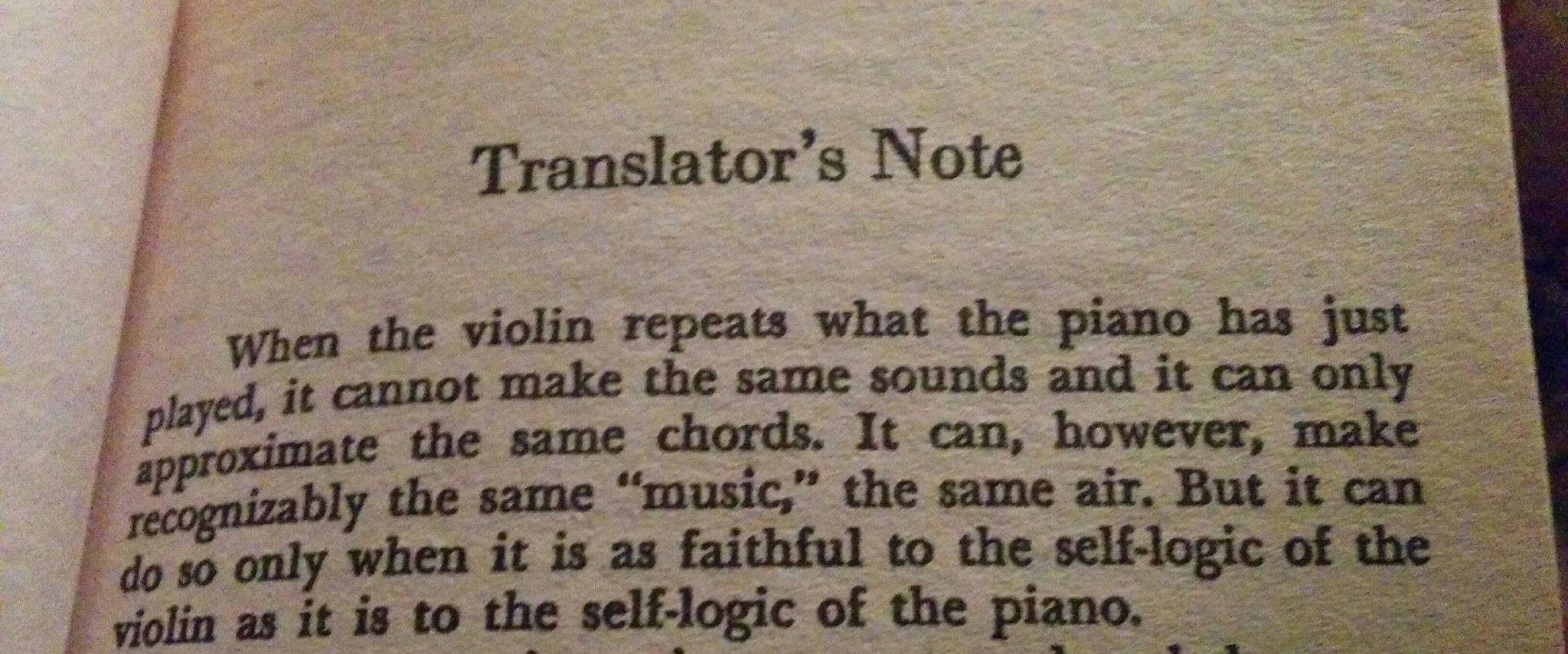

stole this from here on mastodon, but i think this is very cool and a very good description of translation. also reminds me of the fact that there's no actual way to quantify the quality of a translation.

llms, linguistics, languages, and what they have to say about humans

here's the paper whose abstract inspired me to go on this tirade. thankfully, it's open access. you can download it from the download pdf option on the sidebar.

i'll admit, if i can ignore the copyright violations, the plagiarism committed against writers, and the loss of writing jobs, large language models are pretty cool. large language models are things like chatgpt, google's eventual bard, and microsoft's bing ai (which is actually based on chatgpt anyway). their much smaller relatives are pretty much anything that can auto-suggest stuff to you. yes, that includes your autocorrect and the grammar checks and suggestions that you get from microsoft word and google docs. you might not have noticed them or thought of them as language models, but they are. welcome to the world of modern technology.

i say they're pretty cool because they do a thing that we haven't gotten computers to do very well until now, which is mimicking human typing so well that people have been anthropomorphizing these llms. now, that's not terribly interesting on its own because humans like to anthropomorphize anything we can get our grubby little hands on, but i think it says something about us if people are going as far as dying for their llms. (the case i linked is far from the only one that the news has reported; it's just the easiest one i can find.) there is something that seems so very human about llms as they currently are, which is pretty impressive given that just a few years ago, the best we could get from language models was those stupid "type this word and let autocorrect finish the rest" challenges that you still see on instagram and social media. if you've ever tried it, you know it's terrible. if you haven't, there are plenty of prompts out on the internet.

i put an in text link! i feel so accomplished.

now, that's about as much as i'll say about actually thinking llms are cool. i don't think they're very cool outside of being able to mimic human texts, because of how they're made, how they're used, and honestly, whether they're even that useful to begin with. this is where the copyright violations and plagiarism allegations come in, and also taking people's jobs, because that's obviously the profitable thing to do.

but that doesn't erase the fact that llms are still about language and having computers mimic the very human ability to produce language, albeit only in written form connected to the internet. so the authors of the paper i linked were refuting another paper, one that claims "Modern language models refute Chomsky's approach to language". i haven't read that other paper, and since i'm not particularly inclined to agree with it, i don't think i will for a while. never mind the fact that there are plenty of issues with "Chomsky's approach to language". i take very heavy inspiration from the paper i linked and say that llms don't actually give us any new insights on how language is learned, produced, and understood by humans. i'll start with the key points of this paper paraphrased, since those are what inspired most of my views on ai and linguistics.

human babies don't need trillions of gigabytes of information to become fluent native speakers. babies start babbling when they're a few months old (don't ask me for the specifics; i don't remember), and then time flies and you now have a 4-year-old telling you that broccoli sends their mouth to outer space. wait a few more years, and now you have on your hands a teenager that says things that could go on r/BrandNewSentence. is your human adolescent consuming the entire internet in their barely-over-a-decade lifespan? of course not. the next question is, how does a human go from knowing nothing about languages to being able to utter and immortalize horrors that would make even god close the tab of his current cosmic fanfiction? the answer is that linguists don't actually know yet lmao, but if linguists haven't cracked the code yet, then what makes you think that computer scientists can with llms? whatever it is we do, llms can't come even remotely close to approximating our sheer linguistic productivity. there, i threw in a word from my intro to linguistics class. making my professor proud!

llms don't actually reflect shit about human minds and cognition. if llms are going to refute whatever chomsky said about language and language acquisition, they actually have to do the things about language and language acquisition that chomsky's subjects, humans, would do, which they clearly don't. llms can mimic humanlike texts, but the process by which they get there is totally different from how humans can type up a cursed text to plague their friends with, like rats. llms use fancy statistics and computational models to figure out what words and sentences should go next. humans take what they know and express it through words. hell, we don't even know how humans think, let alone how we go from internal monologues to tweets that go viral on the internet. (that is a subject that is under research actually, how humans can type.) if we can't explain these things about ourselves, how can we expect these llms, which didn't acquire their languages the same way we do, to show how we acquire language? the abstract has a great analogy: "the implications of LLMs for our understanding of the cognitive structures and mechanisms underlying language and its acquisition are like the implications of airplanes for understanding how birds fly."
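
to make "fancy statistics" a bit more concrete, here's a toy sketch in python of next-word prediction by counting which word tends to follow which. to be clear, this is just a bigram counter and not how real llms work (those use neural networks trained on way more text), and the function names and the tiny corpus are made up by me for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """count how often each word follows each other word (toy example)."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    # pair every word with the word right after it
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """return the most frequent follower of `word`, or None if never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# made-up corpus, purely for illustration
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)

print(predict_next(model, "the"))       # "cat" follows "the" most often here
print(predict_next(model, "broccoli"))  # never seen, so None
```

the point of the sketch is that nothing in there knows what a cat is. it only knows that "cat" showed up after "the" more often than anything else did.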

the last point of the paper is that it's hard to use the scientific method to evaluate ai performance because the ai doesn't give explanations for anything. it just predicts. it's an important point, but i just don't have very much to say on it because that's sort of beyond my knowledge and expertise. i sound so smart saying that. instead, i'll link this other paper about how llms that learn "a is b" can't reverse it to get "b is a". this paper ties into the idea that llms don't understand what they're outputting, so of course, they can't do basic logic and reasoning that even a 4-year-old could do. i wish i'd saved the ai logical fallacies page i saw on mastodon, but i can't find it.

i sort of lost my train of thought, but those are a lot of my thoughts about ai and linguistics. friendly reminder that human kids can start making brand new sentences at the tender age of 4 years old (and younger, actually) and llms don't do shit for studying human cognition.